VocaLamp is a prototype smart lamp that adapts its lighting, reads word definitions aloud, and helps parents reinforce their children's learning. It started as a project for my graduate Hardware/Software Lab 2 class at GIX and continued through UW’s CoMotion incubator program. The goal was to help parents and children connect through distraction-free vocabulary learning.

One 9-week Quarter

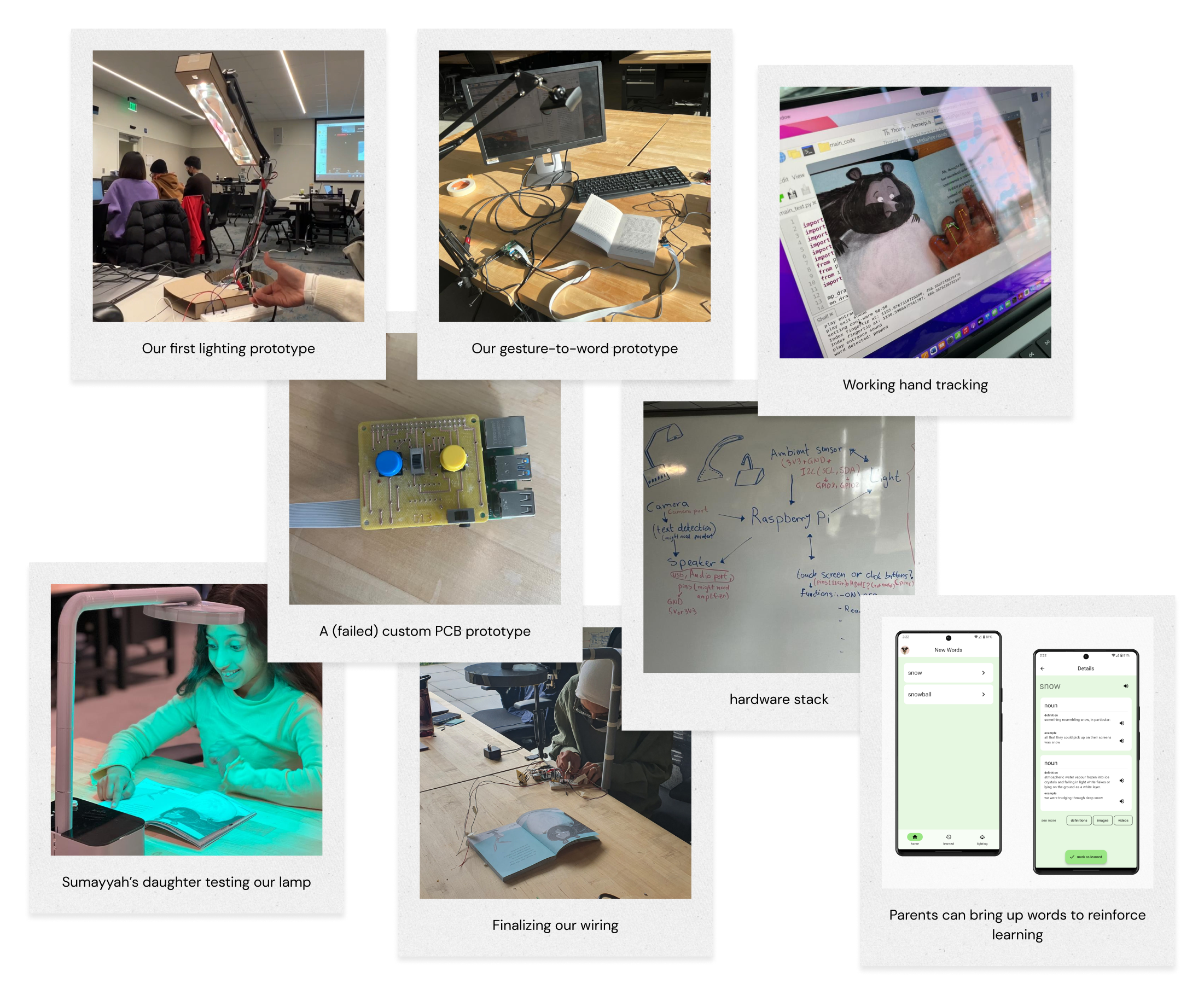

My grad program had two Hardware/Software Lab classes, each giving us nine weeks to go from idea to working prototype. VocaLamp started as one of these projects. Our initial idea was a smart bookmark that would sync progress from a physical book to an eBook. After two weeks of research and sketching, our advisor changed his mind about the idea and we had to start over. Drawing on our research into the benefits of reading physical books, we pivoted to an IoT children's lamp. I was the only software engineer on a team with Sumayyah (communications), Vinitha (architecture), Abhi (UX), and Yiran (architecture), who worked remotely from China.

Throughout the quarter, my team researched how environmental and temporal factors affect learning while reading. I started with two weeks of secondary research on physical vs. digital reading. Next, I conducted a series of interviews about reading habits, preferred environments, and sensory stimuli. Lastly, I conducted contextual inquiries to learn about study habits and useful strategies for long-term learning. Overall, we found that sensory and environmental factors play a major role in long-term recall: "setting the mood" for focus and repeating a stimulus while studying can build lasting connections in memory.

We had three milestones at which we presented work-in-progress prototypes. Milestone 1 covered automatic lighting and basic text recognition. Milestone 2 added basic gesture recognition that triggered lighting changes. Finally, for Milestone 3 we demoed a prototype with point-to-word gesture recognition, spoken definitions, and syncing with a parent companion app. We streamlined our approach along the way, cutting a planned screen and a child companion app to reduce distractions. We wrote the main lamp code in Python and built a simple prototype app with Flutter and Firebase. Milestone 3 was almost derailed when I had to quarantine with COVID, but we worked out a remote setup: a second laptop controlled our Raspberry Pi over VNC, and I controlled that laptop through Zoom from my own (we made it work!).
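Point-to-word selection is the least obvious piece of the Milestone 3 pipeline. The original lamp code isn't reproduced here, so the following is only a minimal Python sketch of one plausible approach. It assumes an OCR step has already produced per-word pixel bounding boxes (similar to the `left`/`top`/`width`/`height` fields that pytesseract's `image_to_data` reports) and that a hand-tracking step has produced a fingertip coordinate in the same image space. The `WordBox` type, the `word_at_fingertip` function, and the `max_gap` threshold are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class WordBox:
    """One OCR result: the recognized text and its pixel bounding box."""
    text: str
    left: int
    top: int
    width: int
    height: int


def word_at_fingertip(
    words: Sequence[WordBox],
    tip_x: float,
    tip_y: float,
    max_gap: float = 40.0,  # hypothetical threshold, in pixels
) -> Optional[str]:
    """Return the word the fingertip most likely points at, or None.

    Assumes image coordinates (y grows downward) and a finger pointing up
    at the page, so the target word sits on or just above the fingertip.
    """
    best: Optional[WordBox] = None
    best_gap = float("inf")
    for w in words:
        # The fingertip must fall within the word's horizontal span.
        if not (w.left <= tip_x <= w.left + w.width):
            continue
        # Ignore words that lie entirely below the fingertip.
        if tip_y < w.top:
            continue
        # Vertical distance from the word's bottom edge down to the tip;
        # 0 when the tip is touching the word itself.
        gap = max(0.0, tip_y - (w.top + w.height))
        if gap <= max_gap and gap < best_gap:
            best, best_gap = w, gap
    return best.text if best is not None else None


if __name__ == "__main__":
    page = [
        WordBox("the", 10, 100, 30, 20),
        WordBox("villa", 50, 100, 50, 20),
        WordBox("was", 110, 100, 40, 20),
        WordBox("large", 50, 140, 50, 20),
    ]
    print(word_at_fingertip(page, tip_x=70, tip_y=130))  # villa
```

Measuring distance from each word's bottom edge, rather than its center, favors the word directly above the finger. A center-distance rule can tie between (or pick) the line below when lines are tightly spaced, as with `villa` and `large` in the example.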

Although our time working on VocaLamp for school was over, we brought the prototype back online to conduct preliminary user testing. We observed a few parents and their children using the lamp together and identified areas for future development. Our observations suggested that native English speakers may not benefit as much from the lamp, while ESL students and their parents found it particularly useful. As members of UW CoMotion's Winter 2023 cohort, we also explored monetization strategies and competitive advantages.

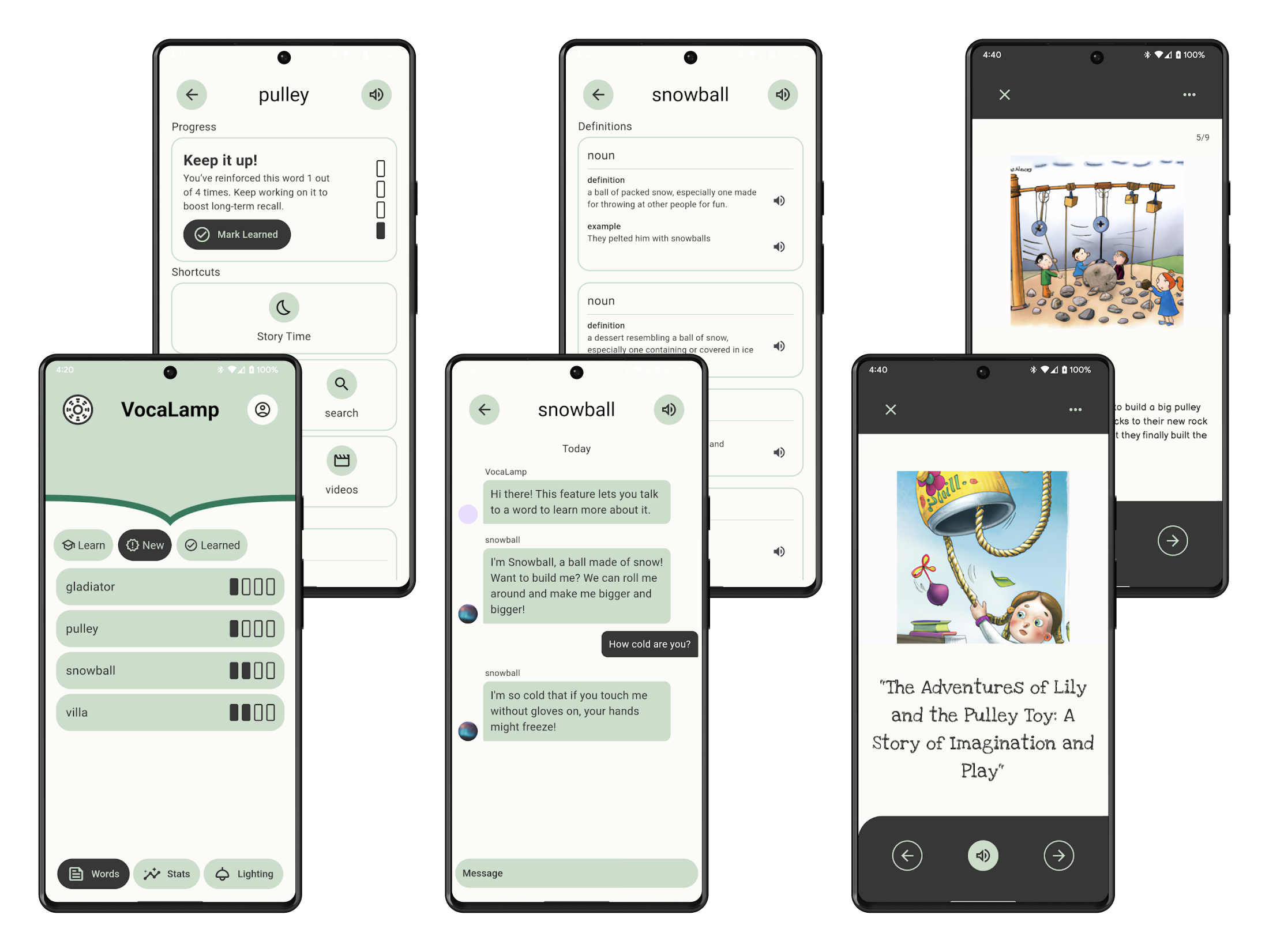

After the team's work on the project wrapped up, I had some ideas for developing the companion app further. We had discussed adding in-app content that parents could use to engage their children around newly learned words. First, I added simple Google search links to external content. Next, I replaced our placeholder definitions and examples with data from OwlBot's dictionary API. Eventually, I redesigned the app entirely, adding a proper back end and timed learning progress. I also added two features powered by OpenAI. The first is a GPT-based chat experience that lets parents and children talk to a newly learned word personified by GPT. For instance, a child can ask a villa (a word that came up during user tests) how big it is or who lived in it. The second is a generated bedtime story, complete with illustrations, so parents can create engaging stories that help their child learn the word in context.
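Much of the word-persona chat comes down to prompt construction. The sketch below is a hypothetical illustration, not the app's actual code: it builds a message list in the role/content format used by OpenAI's Chat Completions API, with a system prompt asking the model to speak as the word. The prompt wording, the `build_persona_messages` name, and the `max_turns` history limit are all assumptions. Sending the list (for example with the `openai` package's `client.chat.completions.create(model=..., messages=...)`) is left out so the sketch runs offline.

```python
from typing import Dict, List, Sequence, Tuple

Message = Dict[str, str]


def build_persona_messages(
    word: str,
    definition: str,
    history: Sequence[Tuple[str, str]],
    question: str,
    max_turns: int = 6,  # hypothetical cap on remembered exchanges
) -> List[Message]:
    """Build a chat message list in which the model plays `word`.

    `history` holds earlier (user_text, assistant_text) pairs; only the most
    recent `max_turns` pairs are kept so the prompt stays short.
    """
    # Hypothetical persona prompt: the model answers in the first person as the word.
    system = (
        f'You are the word "{word}", which means: {definition}. '
        "Talk to a child in the first person, as if you were this word. "
        "Use short, simple sentences and stay kind and age-appropriate."
    )
    messages: List[Message] = [{"role": "system", "content": system}]
    # Guard max_turns <= 0 explicitly: a slice like [-0:] would keep everything.
    recent = list(history)[-max_turns:] if max_turns > 0 else []
    for user_text, assistant_text in recent:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    msgs = build_persona_messages(
        "villa", "a large country house", [], "How big are you?"
    )
    print([m["role"] for m in msgs])  # ['system', 'user']
```

Trimming the history keeps each request small and predictable in cost; a production app might instead summarize older turns rather than dropping them.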